Abstract

Data has been identified as a valuable input for boosting enterprises. Nowadays, the vast quantity of available data creates a favorable scenario for exploiting it, but crucial challenges must be addressed, most notably its sharing and governance. In this context, the data space ecosystem is the cornerstone that enables companies to share and use valuable data assets. However, to benefit from such an opportunity, appropriate Data Governance techniques must be established at two levels: internal to the organization and at the level of sharing between organizations. At a technological level, companies need to design and provision adequate data platforms that handle Data Governance across the whole data life-cycle. In this chapter, we address questions such as: How can data be shared and its value extracted while maintaining sovereignty over the data and its confidentiality, and while fulfilling the applicable policies and regulations? How do the Big Data paradigm and its analytical approach affect correct Data Governance? What key characteristics must data platforms cover to ensure correct data management without losing value? This chapter explores these challenges, providing an overview of state-of-the-art techniques.




1 Introduction

Today we find ourselves in a completely digital world where the content generated by devices and people increases every second. We are witnessing an explosion in the types, volume, and availability requirements of data sources. To give an idea of the magnitude of the problem and its growth, the data generated during 2 days in 2011 exceeded all the data accumulated from the origins of civilization to the beginning of 2003. We can therefore state without doubt that we live in the era of information, and a wide range of technologies associated with it has emerged.

Nevertheless, this new concept is not only a matter of technology and information volume; it represents what has come to be called the power of data. Big Data is more than a technological revolution: its use entails a radical transformation of mindset and business models in companies. Its purpose is to take advantage of the immense value of the data collected from different internal and external sources, such as customers, products, and operations, to optimize the performance of core business processes or even to generate new business opportunities and models. Because of this phenomenon, organizations face new challenges that go beyond what traditional tools report when analyzing, discovering, and understanding their data. Beyond a matter of size, it is a change in mindset, a vision of business opportunities driven by data. In fact, companies are already leveraging data processing to better understand their customers’ profiles, needs, and feelings when interacting with their products and services. Now it is time to explore the next level and identify new business opportunities and initiatives based on the study, exploitation, and sharing of data.

Big Data represents a new era in the use of data, and together with new paradigms such as the Internet of Things (IoT) and Artificial Intelligence (AI), it has redefined the relevance and value of data. Organizations are increasingly aware of the notion of “data as an asset,” but they are also becoming conscious of the need to report a “single version of the truth” [46] in order to build business around data. Beyond the potential benefits, however, this new scenario raises a number of additional challenges that must be addressed to obtain trustworthy, high-quality data.

The most frequent barriers to the successful exploitation of data are the diversity of sources and data types, the tremendous volume of data, the disparate approaches and use cases, and the different processing and storage flows with which they can be implemented, in addition to the high volatility of data and the lack of unified data quality standards. Moreover, these problems multiply in open scenarios where different data agents or organizations interact and, beyond that, at the macro level, where national or international strategies, policies, and regulations must be taken into consideration. In this context, the concept of Data Governance [48], which has existed for decades in the ICT field, has come to the fore. Data Governance should be understood as the set of mechanisms that support decision making and assign responsibilities for information-related processes. Groundwork must first be done to identify clearly which models will be established, who can take which actions, what data will be used and when, and by what methods. Data Governance concerns any individual or group with an interest in how data is created, collected, processed, manipulated, stored, and made available for use or exploitation during its whole life-cycle.

Over the last decades, many efforts [49] have been dedicated to specifying frameworks and standards that define the methodology and indicators for the adequate governance of traditional data processes. Nowadays, these traditional approaches are insufficient because of the complex scenarios that arise, in which data sharing, usage control, and ethical aspects become relevant. For instance, Alhassan et al. [2] identify more than 110 new activities related to Data Governance that need to be acted upon in current data ecosystems. At the same time, certain aspects of Data Governance remain unchanged, such as the establishment of clear objectives and the management of two essential levels of definition. The objectives are the following:

- Ensure that data meets the needs of the organization as well as other applicable requirements (e.g., legal requirements).
- Protect and manage data as a valuable asset.
- Reduce data management costs.

These objectives give rise to considerations that must be implemented at the following two levels:

1. Organizational level: the change of mindset in organizations, involving three key concepts (data, participants, and processes), and the legal, regulatory, administrative, or economic areas of action needed to establish an adequate Data Governance methodology.
2. Technological level: the technological platform that must be deployed to make Data Governance viable. The key reference architectures and general data platform concepts are detailed in later sections of this chapter.

In this chapter, new perspectives for Data Governance are defined (sovereignty, quality, trust and security, economics, and ethics), together with the objectives, guiding principles, methodologies, and agents needed to operate in an environment as complex as Big Data and data ecosystems.

In the described scenario, three key conditioning factors must be highlighted: (1) the availability of large and heterogeneous volumes of internal and external data, (2) the interest of companies in exploiting them through new approaches, and (3) the study of new methodologies and areas of action to ensure their correct governance. Together, these three points shape what have been called Big Data ecosystems, a new wave of large-scale, data-rich smart environments. These Data Spaces or ecosystems present new challenges and opportunities in the design of the architecture needed to implement them, as stated by Curry et al. [23]. Therefore, this chapter introduces the possibilities and challenges of these new ecosystems, taking into consideration aspects such as the challenges that sovereignty presents in shared spaces and the pressing need to enable the “wealth of data” through governing policies or agreements of use.

Finally, a crucial aspect that every Data Governance approach must cover is the data life-cycle. A correct definition of the life-cycle makes data processes easier to understand, considering the nature of the data and the phases it must go through to become a valuable asset. In recent years, the life-cycle concept has been refined and specialized to address large volumes of data, and, as a result, new phases have emerged to embrace the five Vs of Big Data ecosystems (volume, velocity, variety, veracity, and value). At the same time, new life-cycle schemes more oriented to analysis using AI techniques have appeared. However, ensuring the life-cycle through Data Governance methodologies is not a simple task, and, unfortunately, in many organizations it has failed because of its heavy technological and implementation burden. Recently, the study of more practical ways of implementing Data Governance has proliferated, trying to reconstruct it as an engineering discipline that can be integrated into product development and adapted to the data and software life-cycle. This set of good practices for Data Governance is known as DataOps. A section of this chapter is dedicated to this new phenomenon; concretely, it presents, in a practical way, software engineering and analytical techniques that implement the life-cycle with correct Data Governance.

This chapter relates to the technical priorities for implementing Data Governance in Big Data platforms set out in the European Big Data Value Strategic Research and Innovation Agenda [77]. It addresses the horizontal concern of Big Data Governance in the BDV Technical Reference Model. The chapter deals with the implementation of governance as a cornerstone of Big Data platforms and Data Spaces, which are enablers of the AI, Data and Robotics Strategic Research, Innovation, and Deployment Agenda [78]. The specific contributions of this chapter can be summarized as follows:

1. Specification of the concept of Data Governance in the scenario of large volumes of data in an external-internal organizational context, from two aspects: conceptual and technological.
2. Introduction of new practical paradigms for implementing Data Governance as a software engineering discipline: DataOps.
3. Review of existing technological stacks for implementing tasks related to Big Data Governance.

The rest of the manuscript is structured as follows: Sect. 2 and subsections therein provide the aforementioned definition of Data Governance in the context of the Big Data problems, detailing the new emerging environment of Data Spaces. Next, Sect. 3 delves into the phases of the Big Data life-cycle and its connotations for Data Governance, introducing new paradigms such as DataOps. Section 4 presents crucial architectural patterns for data sharing, storage, or processing that should be considered when dealing with the concept of Big Data Governance. The chapter ends with Sect. 5, where a set of conclusions and open challenges are summarized regarding Data Governance action areas.

2 Data Governance: General Concepts

Data Governance, in general terms, is understood as the correct management and maintenance of data assets and related aspects, such as data rights, data privacy, and data security, among others. It has different meanings depending on the level considered: micro, meso, or macro. At the micro, or intra-organizational, level, it is understood as the internal management focus within an individual organization, with the final goal of maximizing the value impact of its data assets. At the meso, or inter-organizational, level, it can be understood as the common principles and rules that a group of participating organizations agree upon within a trusted data community or space for accessing or using data held by any of them. At the macro level, it should be understood as a set of measures to support national or international policies, strategies, and regulations regarding data or some types of data. Even if Data Governance goals and scopes vary at the micro, meso, and macro levels, in most practical cases they should be aligned and connected for the whole data life-cycle, forming a governance continuum. As an example, the General Data Protection Regulation (GDPR) [33] is a macro-level governance measure for European member states regarding personal data and the free movement of such data, and it requires translation into internal measures at the micro level so that organizations can comply with it.

The culture of data and information management within an organization is a widely studied area [46, 48], and for years there have been multiple frameworks and standards [15] that define the methodology and indicators for the correct governance of traditional data, such as:

- DAMA-DMBOK [18]: Data Management Body of Knowledge. Management and use of data.
- TOGAF [44]: The Open Group Architecture Framework. Data architecture as part of the general architecture of a company.
- COBIT [25]: Control Objectives for Information and Related Technologies. General governance of the information technology area.
- DGI Data Governance Framework [71]: a simple frame of reference for establishing Data Governance.

In environments as complex and heterogeneous as Big Data ecosystems, the traditional version of governance is not enough, and the concept of “government and sovereignty of data” needs to be adapted and redefined, as studied in [1, 54, 69, 70]. All these works share common guidelines: a general definition of the term “Big Data Governance,” the identification of the main concepts and elements to be considered, and the definition of certain principles or disciplines of action that pivot on the methodology. From a detailed analysis of these studies, we can establish the appropriate background for understanding the governance of large volumes of data, starting with the following definition:

Big Data Governance is part of a broader Data Governance program that formulates policy relating to the optimization, privacy, and monetization of Big Data by aligning the objectives of multiple functions. Sunil Soares, 2013

An emerging aspect that complements these Data Governance works arises when several participants share information in common spaces, or Data Spaces, together with the business models that can emerge from these meso-level scenarios.

Governance in a data space sets the management and guides the process to achieve the vision of the community, that is, to create value for the data space community by facilitating the finding, access, interoperability, and reuse of data irrespective of its physical location, in a trusted and secure environment. For each participant of the community, this implies that the authority and decisions affecting its own data assets must also be orchestrated with the rights and interests of the other participants collaborating in the data space ecosystem.

The governance in this meso level should provide the common principles, rules, and requirements for orchestrating data access and management for participants according to the different roles they may play within the data space ecosystem. It is implemented with a set of overlapping legal, administrative, organizational, business, and technical measures and procedures that define the roles, functions, rights, obligations, attributions, and responsibilities of each participant.

Some of the principles that are usually considered for the governance of a data space are:

- General conditions for participation and data sharing within the data space in the manner intended for this space, including aspects such as confidentiality, transparency, fair competition, non-discrimination, data commercialization and monetization, and the provision and protection of (the exercise of) data property rights. These conditions could be administrative, regulatory, technical, organizational, etc.
- Conditions for the protection and assurance of the rights and interests of data owners, including data sovereignty to grant and, eventually, withdraw consent for data access, use, sharing, or control.
- Compliance of the rules and requirements of the data space with the applicable law and prevention of unlawful access to and use of data; for instance, regarding personal data and highly sensitive data, or the requirements of public policies, such as law enforcement related to public security, defense, or criminal law, as well as other policies, such as market competition law and other general regulations.
- Specific provisions that may be made for certain types of participants and their roles in the data space, such as the power to represent another participant, the power to intermediate, the obligation to be registered, etc.
- Specific provisions that may be made for certain types and categories of data in the data space, such as anonymization and other privacy-preserving techniques for personal data, permission to reuse publicly owned data under certain conditions, permission for data to be processed only under a certain administration, etc.

Some of the types of rules and requirements that are used in the governance of a data space are:

- Specific technical, legal, or organizational requirements that participants may have to comply with, depending on their specific role in the ecosystem: publishing metadata associated with data assets using common vocabularies, following specific policies, adopting specific technical architectures, etc.
- Conditions, rules, or specific arrangements for data sharing among participants of the data space, as well as specific provisions that may be applicable to the processing environment or to certain types of data. Note that data sharing does not necessarily imply the transfer of data from one participating entity of the data space to another.
- Access or use policies or restrictions that may be applied to certain types of data during the full life-cycle.
- The inclusion of compliance and certification procedures at different levels: participants, technologies, data, etc.
- Conditions, rules, or specific arrangements for any data sharing with third parties. For instance, one of the participants may need to share some data under the governance of the data space with a third party that is not subject to data space governance.
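To make access or use policies and consent withdrawal more concrete, the following minimal Python sketch evaluates whether a requested use of a data asset satisfies the conditions its owner attached to it. It is an illustration under stated assumptions, not an implementation from any data space standard: the `UsagePolicy` class, the `is_use_permitted` function, and the participant and purpose names are all hypothetical, and real data spaces express such rules in dedicated policy languages and enforce them in dedicated components.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class UsagePolicy:
    """Hypothetical usage policy that a data owner attaches to a data asset."""
    owner: str
    allowed_consumers: set[str]
    allowed_purposes: set[str]
    expires: date
    allow_third_party_sharing: bool = False
    consent_withdrawn: bool = False

    def withdraw_consent(self) -> None:
        # Sovereignty: the owner can revoke consent at any time.
        self.consent_withdrawn = True


def is_use_permitted(policy: UsagePolicy, consumer: str, purpose: str,
                     on: date, third_party: bool = False) -> bool:
    """Allow a use only if every condition attached to the asset holds."""
    if policy.consent_withdrawn or on > policy.expires:
        return False
    if consumer not in policy.allowed_consumers:
        return False
    if purpose not in policy.allowed_purposes:
        return False
    # Sharing with parties outside the data space needs explicit permission.
    if third_party and not policy.allow_third_party_sharing:
        return False
    return True


policy = UsagePolicy(
    owner="factory-a",
    allowed_consumers={"supplier-b"},
    allowed_purposes={"predictive-maintenance"},
    expires=date(2030, 12, 31),
)
print(is_use_permitted(policy, "supplier-b", "predictive-maintenance", date(2025, 1, 1)))  # True
print(is_use_permitted(policy, "supplier-b", "marketing", date(2025, 1, 1)))  # False
policy.withdraw_consent()
print(is_use_permitted(policy, "supplier-b", "predictive-maintenance", date(2025, 1, 1)))  # False
```

Keeping the decision in a single pure function makes each rule auditable on its own and easy to extend with further conditions, such as certification status or processing environment.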

In addition to these micro and meso governance levels, there is also a macro level, where public administration bodies, from local to international ones, may set specific governing measures and mechanisms to support their strategies, in many cases as an alternative to the de facto models of the private sector and, in particular, of big tech companies. The previously mentioned GDPR and the directive on open data and the re-use of public sector information, also known as the “Open Data Directive,” are examples of measures in the specific legislative framework that the European Union is putting in place to become a leading role model for a society empowered by data to make better decisions, in business and in the public sector. As these perspectives of Data Governance are being developed independently, there is a potential break in continuity among them. To close the gap and create a governance continuum, it is necessary to support its coverage, ideally, from both the organizational and technical perspectives.

In Fig. 1, we synthesize our vision of the components that should be considered in an open, complex Data Governance continuum, defining two levels: organizational and technological.

In the organizational section, we consider two important aspects: the governance dimensions and the perspectives. Dimensions refer to the scope of governance, either internal, within the organization, or external, affecting ecosystems formed by multiple participants, such as Data Spaces. Perspectives refer to the areas of action, that is, the tasks or fields that the governance measures and mechanisms must cover.

In the work of Soares et al. [69], a Big Data Governance framework was introduced, with the following disciplines: organization, metadata, privacy, data quality, business process integration, master data integration, and information life-cycle management. Based on these disciplines, the proposed governance perspectives of Fig. 1 are grouped into the following themes:

1. Ownership and sovereignty [40]. Data ownership is an important aspect when offering data and negotiating contracts in digital business ecosystems. The associated term “data sovereignty” indicates the rights, duties, and responsibilities of that owner.
2. Trust, privacy, and security [64]. Data must remain safe throughout its entire life-cycle and add value without being compromised at any point. For this, all participants throughout the cycle must be trusted.
3. Value [28]. New digital economic models based on data as an organizational asset are required. The concept of data monetization, which seeks to use data to increase revenue, is essential in this perspective.
4. Quality and provenance [75]. Data quality refers to the processes, techniques, algorithms, and operations aimed at improving the quality of existing data in companies and organizations. Associated with it is provenance, which indicates the traceability, or path, that data follows through the organization.
5. Ethics [63]. This refers to systematizing, defending, and recommending concepts of correct and incorrect conduct in relation to data, in particular, personal data.
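The provenance perspective can be illustrated with a small sketch in which every transformation appends a lineage entry recording the step, the actor, and content hashes of its input and output, so the path of the data can be reconstructed later. This is only one possible approach, assumed here for illustration: `TrackedDataset`, `fingerprint`, and the step and actor names are hypothetical, and production systems typically rely on dedicated metadata and lineage tools.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable

Records = list[dict]


def fingerprint(records: Records) -> str:
    """Stable SHA-256 fingerprint of a small dataset of JSON-serializable rows."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class TrackedDataset:
    records: Records
    lineage: list[dict] = field(default_factory=list)

    def apply(self, step: str, actor: str,
              fn: Callable[[Records], Records]) -> "TrackedDataset":
        """Run one transformation and append a provenance entry for it."""
        output = fn(self.records)
        entry = {
            "step": step,
            "actor": actor,
            "input_hash": fingerprint(self.records),
            "output_hash": fingerprint(output),
        }
        # Return a new dataset so earlier versions (and their lineage) stay intact.
        return TrackedDataset(output, self.lineage + [entry])


raw = TrackedDataset([{"id": 1, "temp": " 21.5 "}, {"id": 2, "temp": None}])
clean = (
    raw.apply("drop_missing", "etl-job", lambda rs: [r for r in rs if r["temp"] is not None])
       .apply("parse_temp", "etl-job", lambda rs: [{**r, "temp": float(r["temp"])} for r in rs])
)
for entry in clean.lineage:
    print(entry["step"], entry["actor"], entry["input_hash"][:8], "->", entry["output_hash"][:8])
```

Because each entry's output hash must match the next entry's input hash, a broken or tampered chain is easy to detect when auditing data quality.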

This list extends Soares's work with security aspects, while life-cycle management is considered independently as a crosscutting element and is covered in Sect. 3 of this chapter. Finally, it is important to reconcile the methodology defined at the conceptual level with the technical implementation, including its components and modules, in an adequate reference architecture. In this chapter, three reference architecture approaches are considered according to their main mission: sharing, storage, or processing of data. An explanation with detailed examples of each theme is given in Sect. 4.1. The materialization of an architecture, or of a combination of architectures, with a selection of effective technologies that meet the requirements of data and information governance is identified as a data platform.

3 Big Data Life-Cycle

As mentioned in the previous section, Data Governance should improve data quality, encourage efficient sharing of information, protect sensitive data, and manage the data set throughout its life-cycle. For this reason, a main concept to introduce within Data Governance is the life-cycle. This is not a new concept, but it must be adapted to the five Vs that characterize Big Data [67]. The Big Data life-cycle is required to transform data into valuable information, because it allows data to be better understood and its nature and characteristics to be better analyzed.

Figure 2 shows a life-cycle scheme composed of five phases (collection, integration, persistence, analysis, and visualization) and how each phase should be adapted, in the case of a Big Data ecosystem, to respond to the requirements imposed by the five Vs [59]: volume, velocity, variety, veracity, and value.
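As a toy illustration of the five phases in Fig. 2, the sketch below chains one Python function per phase. The phase names come from the figure; the two sources, their schemas, and the in-memory store are assumptions made for the example. Nothing here addresses the volume or velocity of a real Big Data deployment, where each phase would be backed by distributed tools.

```python
from statistics import mean


def collect() -> list[dict]:
    """Collection: raw readings from two hypothetical, heterogeneous sources."""
    source_a = [{"device": "a1", "temp_c": 21.0}, {"device": "a1", "temp_c": 23.0}]
    source_b = [{"dev": "b7", "temp_f": 71.6}]  # different field names and units
    return source_a + source_b


def integrate(raw: list[dict]) -> list[dict]:
    """Integration: map both schemas onto one common schema in Celsius."""
    unified = []
    for r in raw:
        if "temp_f" in r:
            celsius = round((r["temp_f"] - 32) * 5 / 9, 2)
            unified.append({"device": r["dev"], "temp_c": celsius})
        else:
            unified.append({"device": r["device"], "temp_c": r["temp_c"]})
    return unified


def persist(records: list[dict], store: dict[str, list[float]]) -> None:
    """Persistence: append readings to a store partitioned by device."""
    for r in records:
        store.setdefault(r["device"], []).append(r["temp_c"])


def analyze(store: dict[str, list[float]]) -> dict[str, float]:
    """Analysis: mean temperature per device."""
    return {device: mean(temps) for device, temps in store.items()}


def visualize(stats: dict[str, float]) -> str:
    """Visualization: a minimal text bar chart, one '#' per whole degree."""
    return "\n".join(f"{d:>4} {'#' * int(t)} {t:.1f}" for d, t in sorted(stats.items()))


store: dict[str, list[float]] = {}
persist(integrate(collect()), store)
print(visualize(analyze(store)))
```

Variety shows up in the integration step, where the second source uses different field names and units; governance rules such as the unit convention live in one place instead of being repeated downstream.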

Data life-cycle management is a process that helps organizations to manage the flow of data throughout its life-cycle—from initial creation through to destruction. Having a clearly defined and documented data life-cycle management process is key to ensuring Data Governance can be conducted effectively within an organization. In the Big Data ecosystem, there are already approaches such as that of Arass et al. [11] that try to provide frameworks to carry out this management, but there is still some work to be done. In the next sections, a series of new software engineering disciplines that try to pave this way are introduced, such as DataOps.

3.1 DataOps

Guaranteeing Big Data security depends on correctly establishing privacy and “good Data Governance” policies and methodologies. However, the technology landscape and stack of Big Data tools are enormous, and most of these tools have arisen without taking into account the requirements and components needed to implement an adequate Data Governance policy.

In this context, DataOps was born as a variant of the software engineering discipline that tries to redirect the strict guidelines established in typical Data Governance methodologies into a set of good practices that are easy to implement technologically. In [26], Julian Ereth proposes the following definition:

DataOps is a set of practices, processes and technologies that combines an integrated and process-oriented perspective on data with automation and methods from agile software engineering to improve quality, speed, and collaboration and promote a culture of continuous improvement. Julian Ereth, 2018

In our view, this definition should be complemented with two important points:

1. Establish two basic guiding principles [12]: (a) improve the cycle times of turning data into a useful data product, and (b) quality is paramount, a principle that requires practices for building trustworthy data.
2. Integrate a new “data component” team into traditional DevOps teams. This team is in charge of all data-related tasks and is primarily composed of data providers, data preparers, and data consumers.

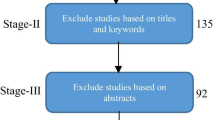

The main scopes of DataOps in Big Data environments can be seen in Fig. 3. The objective is to interconnect all these specialties with each other and with the traditional tasks of the life-cycle.

With DataOps automation, governance can execute continuously as part of development, deployment, operations, and monitoring workflows. Governance automation is one of the most important parts of the DataOps movement. In practice, when Data Governance is backed by a technical platform and applied to data analytics, it is called DataOps. Achieving this requires components and tools, built into the platform implementation, that develop or support these automation processes. Ideally, many of these modules will use AI techniques to achieve greater intelligence and a higher level of automation.
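Governance automation of this kind is often realized as quality gates that run inside the pipeline and block bad data before it reaches consumers. The following minimal sketch assumes that governance rules can be written as per-record predicates and that a failure rate above a threshold should stop the stage. `Check`, `run_quality_gate`, `publish`, and the sample rules are hypothetical and do not correspond to a specific DataOps tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    name: str
    predicate: Callable[[dict], bool]  # True when a record satisfies the rule


class QualityGateError(Exception):
    """Raised to stop the pipeline when data fails its governance checks."""


def run_quality_gate(records: list[dict], checks: list[Check],
                     max_failure_rate: float = 0.0) -> tuple[bool, dict[str, int]]:
    """Count violations per check; pass only if every rate is within the threshold."""
    failures = {c.name: 0 for c in checks}
    for record in records:
        for check in checks:
            if not check.predicate(record):
                failures[check.name] += 1
    total = max(len(records), 1)  # avoid division by zero on empty batches
    passed = all(count / total <= max_failure_rate for count in failures.values())
    return passed, failures


def publish(records: list[dict], checks: list[Check],
            max_failure_rate: float = 0.0) -> list[dict]:
    """Pipeline stage: only data that passes the gate moves downstream."""
    passed, failures = run_quality_gate(records, checks, max_failure_rate)
    if not passed:
        raise QualityGateError(f"quality gate failed: {failures}")
    return records


checks = [
    Check("customer_id present", lambda r: r.get("customer_id") is not None),
    Check("amount non-negative", lambda r: r.get("amount", 0) >= 0),
]
batch = [
    {"customer_id": 1, "amount": 10.0},
    {"customer_id": None, "amount": 5.0},
    {"customer_id": 3, "amount": -2.0},
]
print(run_quality_gate(batch, checks, max_failure_rate=0.1))
# (False, {'customer_id present': 1, 'amount non-negative': 1})
```

In a CI/CD setting, the exception raised by `publish` would fail the pipeline run, so the governance rules are executed on every change rather than audited after the fact.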

In this field, it is worth highlighting how large companies and organizations are implementing this type of governance using the DataOps discipline:

1. Uber [72] has a dedicated project called Michelangelo that helps manage DataOps in the same way as DevOps, encouraging iterative development of models and democratizing access to data and tools across teams.
2. Netflix [13] follows a DataOps approach to manage its historical data and model versioning in the context of its content recommendation tool.
3. Airbnb [17] uses a DataOps approach to control the feature engineering and parameter selection process for the models it builds to improve search ranking and relevance.

In conclusion, DataOps [55] aims to increase the speed, quality, and reliability of data and the analytic processes around it by improving coordination between data science, analytics, data engineering, and operations.

4 Big Data Technologies Under Governance Perspective

As the Big Data paradigm has taken hold, so have the technological options for implementing it. This has given rise to an endless number of systems and tools that try to provide solutions for tasks in the data life-cycle, the so-called Big Data ecosystem [36, 53]. The way these solutions are bundled into complete and effective product suites is commonly referred to as Big Data platforms [30].

The technological origins of Big Data platforms are found in the Hadoop framework. Apache Hadoop originated from a paper on the Google File System published in 2003 [31]. Hadoop distributions continue to evolve, and increasingly efficient and comprehensive data or analytics platforms are emerging from their base, as explained in further detail in the next sections. The main reason is that, as this paradigm has matured, the diversity and complexity of use cases have also increased.

However, one of the main underlying problems is that security technologies in these environments have not been developed at the same pace, and, consequently, this area has become a challenge. Concretely, governance has been addressed only recently, and it still poses many unresolved challenges. Therefore, this section focuses on Big Data technologies, since they are still evolving to address associated risks that do not occur with normal volumes of data, for example, the technical, reputational, and economic risks posited by Paul Tallon in a special issue [52]. In the next sections, the current state of technology (see Sect. 4.2) is examined, and at the end of the chapter, a series of challenges that still lack a clear solution are presented.

4.1 Architectures and Paradigms

In this section, Big Data platforms are analyzed from different perspectives. First, architectural paradigms for data sharing in data ecosystems and for storing Big Data are analyzed; then, computing paradigms are examined in detail.

4.1.1 Architectures for Data Sharing

There are different attempts in the literature to define reference architectures around the concept of data sharing in distributed environments. The approaches vary significantly in characteristics such as security conditions, purpose of sharing, or technological focus, and especially in the application domain they address. The most important ones, covering different domains, are listed below:

- Industry 4.0. Several international initiatives are attempting to organize the paradigm into an easily understandable and widely adopted reference architecture. This is the case of the International Data Spaces (IDS) architecture promoted by the German-based IDS Association (IDSA) [41], the Reference Architecture Model Industry 4.0 (RAMI4.0) [66] developed by the German Electrical and Electronic Manufacturers’ Association, and the Industrial Internet Reference Architecture (IIRA) of the Industrial Internet Consortium (IIC) [50]. Among them, the IDS architecture, the leading reference architecture in Europe, stands out as optimal in terms of its capabilities to represent data security and sovereignty needs.
- Health domain. Data privacy is the central axis of most studies. For example, article [76] presents a system that addresses the exchange of medical data between medical repositories in an environment without trust.
- Smart cities. Regarding this new paradigm of data sharing, article [20] proposes a trust model for sharing data in smart cities.
- Social and cultural domains. This domain considers digital content sharing environments, such as social networks, e-governance, or associations around research or cultural data. Article [39] provides a systematic mechanism to identify and resolve conflicts in collaborative social network data.
- It is worth mentioning the European project Gaia-X [19], which is devoted to promoting a secure, open, and sovereign use of data. It promotes portability, interoperability, and interconnectivity within a federated data infrastructure while preserving European values of openness, transparency, trust, and sovereignty, among others. Gaia-X’s vision is to enable companies to share their data in ways they control, and the International Data Spaces (IDS), originally known as the Industrial Data Space, provide an enabling infrastructure for this objective. For this reason, the IDS standard, which enables open, transparent, and self-determined data exchange, is a central element of the Gaia-X architecture.

4.1.2 Architectures for Data Storage

Traditionally, a large number of architectural patterns have been used to store data centrally in order to drive complex analyses across different data sources. However, the requirements of today's large data volumes call for new distributed approaches. Below, we present the three main architectural patterns that large corporations have deployed in recent years: the data warehouse, which follows a centralized approach in which data normally resides in a single repository, and the data hub and the data lake, in which data can be stored in a decentralized manner, with physically distributed repositories sharing a common access point.

The data warehouse concept originated in 1988 with the work of IBM researchers; William H. Inmon [43] later described it as "a subject-oriented, integrated, time-variant, and non-volatile collection of data that supports the decision-making process." Such systems are used mainly for reporting and data analysis. The repository can be physical or logical and emphasizes capturing data from various sources, primarily for access and analytical purposes. The typical components of any data warehouse are as follows:

-

ETL (extract, transform, load) process: the component that preprocesses the data so that it can be persisted with the correct structure

-

Central repository: the central area of the data warehouse where the data is stored

-

Views or DataMarts: logical views of the data
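The three components can be illustrated with a minimal sketch that uses Python's standard-library sqlite3 module as a stand-in for the central repository. The sources, table, and view names are hypothetical; a production warehouse would run on a dedicated engine, and the ETL step would usually be handled by dedicated tooling.

```python
import sqlite3

# Hypothetical operational sources with inconsistent formats.
SOURCE_A = [("2021-02-01", "north", "120.50"), ("2021-02-02", "south", "80.00")]
SOURCE_B = [{"date": "2021-02-01", "region": "NORTH ", "amount": 30.0}]

def extract():
    """Extract: pull raw records from every source as (day, region, amount) tuples."""
    rows = list(SOURCE_A)
    rows += [(r["date"], r["region"], r["amount"]) for r in SOURCE_B]
    return rows

def transform(rows):
    """Transform: enforce one structure (trimmed lower-case region, numeric amount)."""
    return [(day, region.strip().lower(), float(amount)) for day, region, amount in rows]

def load(conn, rows):
    """Load: persist the cleaned rows into the central repository."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (day TEXT, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))

# View / data mart: a logical, subject-specific view over the central repository.
conn.execute(
    "CREATE VIEW sales_by_region AS "
    "SELECT region, SUM(amount) AS total FROM sales GROUP BY region"
)
print(conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
```

Because the transform step harmonizes "NORTH " and "north", the view reports a single total per region; without it, the same region would appear twice in the data mart.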

Traditionally, data warehouses have been hosted on corporate servers, but in recent years, with the boom of cloud providers, enterprises have started to deploy them in the cloud. Notable examples of cloud data warehouses include Amazon Redshift [3], Microsoft Azure Synapse Analytics [14], Google BigQuery [34], and Snowflake [68].

The second approach is the data hub [16]. Here, the data is not centralized under a single structure; instead, there is a central point of access to it. The difference is significant: what is centralized is not the data itself but the information about it, that is, its metadata. The main objective is to integrate all the information about the data in a single point, taking into account the different business needs a company may have. As a result, data can be physically moved and reorganized in a different system, since its metadata continues to be kept in a single point.
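The key idea of the data hub, centralizing metadata rather than data, can be sketched as a small registry. The DataHub class, its methods, and the storage URIs are hypothetical and only illustrate how consumers keep resolving a dataset through the hub after it has been physically moved.

```python
from dataclasses import dataclass, field

@dataclass
class DatasetEntry:
    """Metadata kept at the hub; the data itself stays in its source system."""
    name: str
    location: str  # physical location, e.g. a URI in some storage system
    owner: str
    tags: set = field(default_factory=set)

class DataHub:
    """Hypothetical central access point that stores metadata only."""

    def __init__(self):
        self._catalog = {}

    def register(self, entry):
        self._catalog[entry.name] = entry

    def locate(self, name):
        """Consumers always resolve datasets through the hub."""
        return self._catalog[name].location

    def relocate(self, name, new_location):
        """The data is moved physically; only its metadata is updated here."""
        self._catalog[name].location = new_location

    def by_tag(self, tag):
        return sorted(e.name for e in self._catalog.values() if tag in e.tags)

hub = DataHub()
hub.register(DatasetEntry("customers", "postgres://crm/customers", "sales", {"pii"}))
hub.register(DatasetEntry("invoices", "hdfs:///erp/invoices", "finance", {"finance"}))
hub.relocate("customers", "s3://archive/customers.parquet")
print(hub.locate("customers"))
```

Consumers never hard-code the physical location, so moving "customers" to an archive does not break them.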

Finally, we present the data lake [27] architecture, the most modern of the three and a clearly analytical approach. A data lake is a repository of data stored in its natural raw format, generally as objects or files. The name comes from the metaphor of data kept in "water," a transparent and clear substance: the data is preserved in its natural state and is not modified. In other words, the data remains original, and a single storage holds a large amount of information of all kinds, from structured to unstructured data. It is an architectural design pattern that favors the use of data in analytical processes.

Data lakes hold a large amount of raw data until it is needed. Unlike a hierarchical data warehouse, which stores data in files or folders, a data lake uses a flat architecture. The term has come to describe any large data set whose schema and data requirements are not defined until the data is queried, an approach known as schema-on-read.
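Schema-on-read can be illustrated with a short sketch: heterogeneous raw records are stored untouched, and a schema is imposed only when a particular query reads them. The records and the temperature query are hypothetical; another query could apply an entirely different schema to the same raw zone.

```python
import json

# Raw zone: heterogeneous records stored as-is (no schema enforced on write).
RAW_ZONE = [
    '{"sensor": "t1", "temp_c": 21.5, "ts": "2021-05-19T10:00"}',
    '{"sensor": "t2", "temperature": "70.7F"}',
    '{"user": "alice", "action": "login"}',
]

def read_temperatures(raw_lines):
    """Schema-on-read: the (sensor, celsius) schema is imposed only at query time."""
    for line in raw_lines:
        record = json.loads(line)
        if "sensor" not in record:
            continue  # the record does not fit this query's schema, so it is skipped
        if "temp_c" in record:
            celsius = float(record["temp_c"])
        elif str(record.get("temperature", "")).endswith("F"):
            celsius = (float(record["temperature"][:-1]) - 32) * 5 / 9
        else:
            continue
        yield record["sensor"], round(celsius, 1)

print(list(read_temperatures(RAW_ZONE)))
```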

Each element in a data lake is assigned a unique identifier and tagged with a set of extended metadata tags. When a business question needs to be answered, the data lake can be queried to identify the data related to it, and the retrieved data can then be analyzed to help the organization obtain a valuable answer.
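The identifier-plus-tags mechanism can be sketched as follows. The DataLakeIndex class and the object paths are hypothetical; real platforms keep such metadata in a catalog service rather than in memory.

```python
import uuid

class DataLakeIndex:
    """Hypothetical index: every stored object gets a unique id plus metadata tags."""

    def __init__(self):
        self._objects = {}

    def put(self, path, tags):
        object_id = str(uuid.uuid4())
        self._objects[object_id] = {"path": path, "tags": set(tags)}
        return object_id

    def find(self, *required_tags):
        """Return the paths of objects that carry all the requested tags."""
        wanted = set(required_tags)
        return sorted(o["path"] for o in self._objects.values() if wanted <= o["tags"])

lake = DataLakeIndex()
lake.put("raw/crm/2021/customers.csv", {"customers", "crm", "pii"})
lake.put("raw/web/2021/clickstream.json", {"customers", "web"})
lake.put("raw/erp/2021/invoices.parquet", {"finance", "erp"})
print(lake.find("customers"))
```

A question about customer behavior thus starts by locating every object tagged "customers", regardless of its format or origin.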

A data lake is made up of two clear components: the repository of raw data and the transformations that adapt the data to the structure required for further processing.

Table 1 compares these three approaches. When an analysis-oriented approach is needed for specific use cases, the most appropriate option is the data warehouse. By contrast, if the analysis has not been defined and should remain as open as possible to future use cases, the data lake pattern should be chosen. Finally, if the possible data sources or the purpose of using them are unclear, the best option is the data hub. Article [62] clearly sets out the benefits and disadvantages of each of the three architectural patterns.

To foster good Data Governance practices when using these three storage paradigms, we propose the following considerations:

-

When a new data source is integrated, access control policies should be defined identifying who should have access, with which privileges, and to which content.

-

For data lakes, all data sources should be registered in a centralized data catalog to avoid silos and to facilitate their access and discovery. In addition, relevant metadata for each data source should be included to enable adequate filtering and to improve classification mechanisms.

-

A usage control policy should be defined for each data source to clearly identify aspects such as private fields that require anonymization, data expiration, and any additional operations required when processing the data source.

-

An intelligent mechanism for detecting duplicate data and analyzing data quality would be very useful for providing better insights.

-

Encryption techniques should be considered when storing data.
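Several of these considerations can be combined in a per-source policy record, as in the following sketch. SourcePolicy, read_source, and the hard-coded salt are hypothetical. Salted hashing is pseudonymization rather than full anonymization, and encryption at rest is not shown because it would rely on a storage- or library-specific mechanism.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date

@dataclass
class SourcePolicy:
    """Hypothetical per-source governance record (access and usage rules)."""
    source: str
    readers: set     # who may access the source (access control)
    pii_fields: set  # fields to pseudonymize before use (usage control)
    expires_on: date  # data must not be used after this date (usage control)
    tags: set = field(default_factory=set)  # catalog metadata for discovery

def pseudonymize(value, salt):
    """Salted SHA-256 digest, truncated; a stand-in for a real anonymization step."""
    return hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()[:16]

def read_source(policy, user, rows, today, salt="hypothetical-secret"):
    if user not in policy.readers:
        raise PermissionError(f"{user} has no access to {policy.source}")
    if today > policy.expires_on:
        raise PermissionError(f"{policy.source} expired on {policy.expires_on}")
    return [
        {k: pseudonymize(v, salt) if k in policy.pii_fields else v for k, v in row.items()}
        for row in rows
    ]

def find_duplicates(rows):
    """Flag exact duplicate records by hashing a canonical form of each row."""
    seen, duplicates = set(), []
    for row in rows:
        digest = hashlib.sha256(repr(sorted(row.items())).encode("utf-8")).hexdigest()
        if digest in seen:
            duplicates.append(row)
        seen.add(digest)
    return duplicates

policy = SourcePolicy("crm.customers", readers={"analyst"}, pii_fields={"email"},
                      expires_on=date(2022, 12, 31), tags={"customers", "crm"})
rows = [{"id": 1, "email": "a@x.org"}, {"id": 1, "email": "a@x.org"}]
out = read_source(policy, "analyst", rows, today=date(2021, 5, 19))
print(out[0]["email"] != "a@x.org", len(find_duplicates(rows)))
```

Exact-hash duplicate detection only catches identical records; near-duplicates would need fuzzy matching, which is part of the "intelligent mechanism" the text calls for.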

The aforementioned architectures can be implemented with different technologies, some of which may be distributed, such as file systems or NoSQL databases. An example in the storage layer is a SAN (storage area network), which offers block storage shared among different servers. Other feasible examples are GlusterFS [32] for POSIX-compliant storage and the well-known Hadoop HDFS [35], which can leverage data locality for different types of workloads and tools.

4.1.3 Architectures for Data Processing

Over the last decade, organizations have tended to acquire ever more data. In this context, traditional technologies such as relational databases began to experience performance issues, as they could not process and manage large quantities of data with diverse structures at low latency. Paradigms such as MapReduce [22] addressed this problem by dividing the data across several machines, processing each portion in isolation on its machine (when possible), and finally merging the results, thereby enabling distributed computing. In addition, data can be replicated across a set of machines to achieve fault tolerance and high availability. This approach paved the way for Big Data technologies that enable batch processing architectures.
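The divide, process, and merge steps can be illustrated with the classic word-count example. This sketch simulates the map, shuffle, and reduce phases sequentially in a single process; on a real cluster, each map call would run on the machine holding its partition, and replication and fault tolerance would be handled by the framework.

```python
from collections import defaultdict
from itertools import chain

def map_phase(partition):
    """Map: runs independently on each machine's partition, emitting (key, 1) pairs."""
    return [(word.lower(), 1) for line in partition for word in line.split()]

def shuffle(mapped_outputs):
    """Shuffle: group intermediate pairs by key so that each key reaches one reducer."""
    groups = defaultdict(list)
    for key, value in chain.from_iterable(mapped_outputs):
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: merge the partial results for each key."""
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical input split across three "machines".
partitions = [
    ["data governance", "data sharing"],
    ["data sovereignty"],
    ["sharing governance policies"],
]
mapped = [map_phase(p) for p in partitions]  # would run in parallel on a cluster
counts = reduce_phase(shuffle(mapped))
print(sorted(counts.items()))
```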

As first defined in 2011 in [38] and later described in [29], the Lambda architecture is a generic, scalable, and fault-tolerant real-time processing architecture. Its general purpose is to apply a function over a dataset and return the result with low latency. However, in some scenarios, computing over raw data leads to heavy processes; consequently, specific queries are performed over already preprocessed data to minimize latency and provide a real-time experience. For this purpose, a batch layer provides such preprocessed data (views precomputed over the historical dataset), a speed layer complements it with low-latency processing of the most recent data, and finally a serving layer answers queries over the data merged from both the batch and speed layers.
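The interplay of the three layers can be sketched with in-memory counters. The page-view example and the LambdaPipeline class are hypothetical, and the sketch is single-threaded, so clearing the speed view after a batch run is safe here; a real deployment must track which events each batch run has covered.

```python
from collections import Counter

class LambdaPipeline:
    """Toy Lambda architecture computing page-view counts (hypothetical example)."""

    def __init__(self):
        self.master_dataset = []     # immutable, append-only raw events
        self.batch_view = Counter()  # precomputed by the (slow) batch layer
        self.speed_view = Counter()  # incremental view over events not yet batched

    def ingest(self, page):
        self.master_dataset.append(page)
        self.speed_view[page] += 1   # speed layer: low-latency incremental update

    def run_batch(self):
        """Batch layer: recompute the view from scratch over the whole master dataset."""
        self.batch_view = Counter(self.master_dataset)
        self.speed_view.clear()      # recent events are now covered by the batch view

    def query(self, page):
        """Serving layer: merge the batch and speed views."""
        return self.batch_view[page] + self.speed_view[page]

p = LambdaPipeline()
for page in ["home", "home", "docs"]:
    p.ingest(page)
print(p.query("home"))  # answered by the speed layer alone before any batch run
p.run_batch()
p.ingest("home")        # arrives after the batch run, visible through the speed layer
print(p.query("home"))
```

Note that the counting logic exists twice, once in ingest and once in run_batch; this duplication is precisely what the Kappa architecture sets out to remove.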

Later, in 2014, Kreps [47] questioned the Lambda architecture, pointing out its disadvantages, and proposed the Kappa architecture, a simplified approach in which the batch layer is eliminated. One of the main advantages of this architecture is that there is no need to implement two heterogeneous components for the batch and speed layers. As a consequence, implementation, debugging, testing, and maintenance are significantly simplified. By contrast, the absence of a batch layer hinders (or even prevents) the execution of heavy processes, since the preprocessing that the batch layer performs for the speed layer in the Lambda architecture cannot be done. Consequently, in the Kappa architecture the speed layer may have to execute heavier processes, making it less efficient.
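In the Kappa approach, a single stream-processing code path serves both live and historical data; reprocessing is done by replaying a retained, append-only event log through a new version of the job. In this sketch, a Python list stands in for that durable log, and the event names and section mapping are hypothetical.

```python
from collections import Counter

# Append-only event log; in practice a durable, replayable log, here a plain list.
event_log = ["home", "docs", "home", "pricing", "home"]

def stream_job(log, start_offset=0, normalise=str):
    """The single processing path: the same code handles live and replayed events."""
    view = Counter()
    for offset in range(start_offset, len(log)):
        view[normalise(log[offset])] += 1
    return view

v1 = stream_job(event_log)

# Reprocessing: deploy a new job version (grouping pages into sections)
# and replay the log from offset 0 instead of running a separate batch layer.
SECTIONS = {"home": "landing", "pricing": "landing", "docs": "documentation"}
v2 = stream_job(event_log, normalise=SECTIONS.get)
print(v1["home"], v2["landing"])
```

Replaying the whole log is cheap here but becomes expensive for heavy computations over long histories, which is the inefficiency described above.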

In conclusion, the processing architecture should be selected according to the type of problem to be solved. For heavy workloads that do not require real-time decisions, a batch architecture may be enough. Conversely, when only real-time data is required, there is no need to provide historical data, and processed data can therefore be discarded, the Kappa architecture is adequate. Finally, when both heavy processes and real-time data are required, the Lambda architecture should be selected.
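The selection rule reduces to two questions, which the following sketch encodes. The function and its two boolean inputs are a simplification introduced here; real projects would also weigh factors such as team skills, cost, and data retention requirements.

```python
from enum import Enum

class Architecture(Enum):
    BATCH = "batch"
    KAPPA = "kappa"
    LAMBDA = "lambda"

def choose_architecture(heavy_processing: bool, real_time: bool) -> Architecture:
    """Rule of thumb from the text, reduced to two hypothetical yes/no questions."""
    if real_time and heavy_processing:
        return Architecture.LAMBDA  # both heavy processes and real-time data
    if real_time:
        return Architecture.KAPPA   # real-time only; processed data can be discarded
    return Architecture.BATCH       # no real-time decisions needed

print(choose_architecture(heavy_processing=True, real_time=True).value)
```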

Besides the governance considerations set out in the previous subsection, it is highly recommended, when processing data, to track the operations performed on each data source in order to provide adequate data lineage and a robust audit mechanism.
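Operation tracking can be attached to processing steps with a decorator, as sketched below. The track_lineage decorator, the in-memory LINEAGE_LOG, and the dataset names are hypothetical; a real platform would persist these records in a metadata or audit service.

```python
import functools
from datetime import datetime, timezone

LINEAGE_LOG = []  # hypothetical audit store; a real platform would persist it

def track_lineage(operation):
    """Record which input produced which output, with row counts and a timestamp."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(input_name, rows, output_name):
            # The wrapped step only sees the rows; the names exist for lineage.
            result = func(rows)
            LINEAGE_LOG.append({
                "operation": operation,
                "input": input_name,
                "output": output_name,
                "rows_in": len(rows),
                "rows_out": len(result),
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator

@track_lineage("drop_incomplete")
def drop_incomplete(rows):
    """Remove records that contain missing values."""
    return [r for r in rows if None not in r.values()]

clean = drop_incomplete(
    "crm.customers",
    [{"id": 1, "email": "a@x.org"}, {"id": 2, "email": None}],
    "crm.customers_clean",
)
entry = LINEAGE_LOG[-1]
print(entry["operation"], entry["input"], "->", entry["output"], entry["rows_in"], entry["rows_out"])
```

Chaining such records (the output of one step is the input of the next) yields the lineage graph, and the timestamps provide the basis for auditing.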

4.2 Current Tool Ecosystem for Big Data Governance

Currently, a number of useful tools cover, to some extent, the Data Governance requirements of Big Data platforms. This subsection examines the most successful ones.

Kerberos [45] is a network authentication protocol that allows two parties, such as a client and a service, to securely prove their identity to each other. It follows a client-server architecture and works on the basis of tickets, which serve to prove the identity of users.

Apache Ranger [9] improves authorization support in Hadoop ecosystems. It provides a centralized platform to define, administer, and manage security policies consistently across many Hadoop components. Security administrators can thus manage access control by editing policies with different access scopes, such as files, folders, databases, tables, or columns. Additionally, the Ranger Key Management Service (Ranger KMS) offers scalable encryption in HDFS, and Ranger can also track access requests.
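The idea of centrally managed policies with different access scopes can be illustrated with a small evaluator. This is not Ranger's API or policy format: the Policy class, the wildcard convention, and the default-deny rule are assumptions made for the sketch.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Policy:
    """Hypothetical scope-based policy; "*" matches any value at that level."""
    database: str
    table: str = "*"
    column: str = "*"
    users: frozenset = frozenset()
    access: frozenset = frozenset({"select"})

def _matches(pattern, value):
    return pattern == "*" or pattern == value

def is_allowed(policies, user, access, database, table, column):
    """Default deny: access is granted only if some policy covers the whole request."""
    return any(
        user in p.users
        and access in p.access
        and _matches(p.database, database)
        and _matches(p.table, table)
        and _matches(p.column, column)
        for p in policies
    )

POLICIES = [
    Policy("sales", users=frozenset({"analyst"})),                    # whole database
    Policy("hr", "employees", "name", users=frozenset({"analyst"})),  # single column
]
print(is_allowed(POLICIES, "analyst", "select", "hr", "employees", "name"))
print(is_allowed(POLICIES, "analyst", "select", "hr", "employees", "salary"))
```

Defining policies at the narrowest necessary scope (here, a single column of the hr database) lets administrators expose some fields of a sensitive table while hiding others.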

The Apache Knox Gateway [7] provides perimeter security, allowing data platforms to be accessed externally while satisfying policy requirements. It enables HTTP access, through RESTful APIs, to a number of technologies in the stack. To this end, Knox acts as an HTTP interceptor that provides authentication, authorization, auditing, URL rewriting, web vulnerability removal, and other security services through a series of extensible interceptor processes.
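The interceptor-chain pattern behind such a gateway can be sketched in a few lines. None of the names below come from Knox; the tokens, grants, and internal URL are hypothetical, and each interceptor wraps the next handler so that requests flow through auditing, authentication, authorization, and URL rewriting before reaching the backend.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Handler = Callable[[Request], str]

# Hypothetical configuration; none of these names come from Knox itself.
VALID_TOKENS = {"token-123": "alice"}
GRANTS = {("alice", "/hdfs")}
INTERNAL_URLS = {"/hdfs": "http://namenode.internal:9870"}
AUDIT_LOG: List[str] = []

def audit(next_handler: Handler) -> Handler:
    def handle(req: Request) -> str:
        response = next_handler(req)
        AUDIT_LOG.append(f"{req['path']} -> {response}")
        return response
    return handle

def authenticate(next_handler: Handler) -> Handler:
    def handle(req: Request) -> str:
        user = VALID_TOKENS.get(req.get("token", ""))
        if user is None:
            return "401 Unauthorized"
        return next_handler({**req, "user": user})
    return handle

def authorize(next_handler: Handler) -> Handler:
    def handle(req: Request) -> str:
        service = "/" + req["path"].lstrip("/").split("/")[0]
        if (req["user"], service) not in GRANTS:
            return "403 Forbidden"
        return next_handler({**req, "service": service})
    return handle

def rewrite_url(next_handler: Handler) -> Handler:
    def handle(req: Request) -> str:
        # Hide the internal topology: map the public path to the internal service URL.
        suffix = req["path"][len(req["service"]):]
        return next_handler({**req, "url": INTERNAL_URLS[req["service"]] + suffix})
    return handle

def backend(req: Request) -> str:
    return f"200 OK from {req['url']}"

def build_gateway(target: Handler, interceptors: List[Callable[[Handler], Handler]]) -> Handler:
    """Wrap the target so that the interceptors run in list order."""
    handler = target
    for interceptor in reversed(interceptors):
        handler = interceptor(handler)
    return handler

gateway = build_gateway(backend, [audit, authenticate, authorize, rewrite_url])
print(gateway({"token": "token-123", "path": "/hdfs/webhdfs/v1/tmp"}))
print(gateway({"token": "wrong", "path": "/hdfs/webhdfs/v1/tmp"}))
```

Because every interceptor has the same shape, new security services can be added to the chain without modifying the existing ones, which is the extensibility the text refers to.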

Apache Atlas [4] offers a set of governance utilities that help companies fulfill their compliance requirements in the Hadoop data ecosystem. It manages metadata to better catalog company data, enabling classification and supporting collaboration among the different profiles of a team.

As stated in [61], Cloudera Navigator [21] provides a set of functionalities related to Data Governance in the context of Big Data platforms. Specifically, it enables metadata management, data classification, data lineage, auditing, policy definition, and data encryption. In addition, it can be integrated with Informatica [42] to extend lineage support beyond the data lake.

We had intended to cover additional technologies in this section, but some of them, such as Apache Sentry [10], Apache Eagle [6], and Apache Metron [8], have recently been moved to the Apache Attic [5], which means that they have reached their end of life.

Regarding technologies for data exchange and sharing, it is worth noting that almost all solutions and approaches are still in early versions. As examples, we describe (a) platforms that have begun to explore this field, such as Snowflake; (b) solutions for data sharing in the cloud; and (c) technologies for data usage control.

Snowflake [68] is another tool that offers Data Governance capabilities; in particular, it allows governed data to be shared securely and instantly across the business ecosystem. Sharing data within the same organization has also evolved over the years, and cloud-based technologies (e.g., ownCloud [58], OneDrive [56]) have replaced shared folders as the preferred method for sharing documents.

Today, there are multiple technologies for secure access control, such as Discretionary Access Control (DAC), Mandatory Access Control (MAC), Role-Based Access Control (RBAC), and Attribute-Based Access Control (ABAC); a comparative analysis can be found in the survey [73]. However, their scope is limited to access, so they do not solve the challenges that arise when data is shared. For this task, distributed usage control technologies are necessary. To our knowledge, there are few research works in this field, and the existing ones have not yet provided mature technological solutions to the industry [60]. For example, within the context of the IDS architecture [57], new solutions that allow controlling data usage in distributed storage platforms are being considered and, in some cases, developed. Specifically, the technologies currently under development are LUCON [65], MYDATA-IND2UCE [37], and Degree [51], but all three are at early stages of maturity.
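The difference between access control and usage control can be illustrated as follows. RBAC and ABAC take a single decision when a request arrives, whereas a usage policy travels with the shared data and is checked on every use. All rules and names below are hypothetical and do not reflect the policy languages of LUCON, MYDATA-IND2UCE, or Degree. Moreover, a plain Python object can be bypassed by its holder; real usage control needs a trusted enforcement component on the consumer side.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Access control: a single decision taken when the request arrives.
ROLE_PERMISSIONS = {"analyst": {"read"}, "steward": {"read", "write"}}

def rbac_allows(role, action):
    """RBAC: permissions are attached to roles, and users act through a role."""
    return action in ROLE_PERMISSIONS.get(role, set())

def abac_allows(subject, resource, action):
    """ABAC: the decision depends on attributes (hypothetical rule: same department)."""
    return action == "read" and subject.get("department") == resource.get("owner_department")

# Usage control: constraints that travel with the data after it has been shared.
@dataclass
class UsagePolicy:
    expires_at: datetime
    max_uses: int
    allowed_purposes: set = field(default_factory=set)

@dataclass
class SharedDataset:
    payload: list
    policy: UsagePolicy
    uses: int = 0

    def use(self, purpose, now):
        """Checked on every use at the consumer side, not only when access is granted."""
        if now >= self.policy.expires_at:
            raise PermissionError("usage period has expired")
        if purpose not in self.policy.allowed_purposes:
            raise PermissionError(f"purpose {purpose!r} is not permitted")
        if self.uses >= self.policy.max_uses:
            raise PermissionError("maximum number of uses reached")
        self.uses += 1
        return self.payload

start = datetime(2021, 5, 19)
shared = SharedDataset(
    payload=[10, 20, 30],
    policy=UsagePolicy(start + timedelta(days=30), max_uses=2, allowed_purposes={"research"}),
)
if rbac_allows("analyst", "read"):
    print(shared.use("research", start))
```

Once access has been granted, RBAC and ABAC have nothing more to say; the usage policy keeps constraining purpose, time, and number of uses after the data has left the provider.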

As discussed in this subsection and summarized in Table 2, besides proprietary cloud solutions, certain Big Data tools support governance aspects. However, some governance support is still missing, such as editing usage control policies to strengthen sovereignty, identifying duplicate data, checking data quality and veracity, and anonymizing sensitive data when it is shared.

5 Conclusion and Future Challenges

In an environment as complex as Big Data, it is not easy to establish adequate Data Governance policies, and the difficulty increases when the tools that support this task have not grown at the same pace as the Big Data technology stack. This chapter has presented these facts and has attempted to shed some light on the path for companies or organizations that wish to establish a Big Data Governance methodology. To this end, the main contributions have focused on providing an adequate definition of the Big Data environment and on introducing new disciplines such as DataOps, which materialize Data Governance from a perspective close to software engineering. Finally, a number of significant architectures and solutions useful for technological implementation have been examined.

In addition, throughout the chapter, new challenges have been introduced that are not currently addressed adequately. This section compiles the most important ones and highlights possible future work in each area. The challenges to consider are the following:

-

Usage control to guarantee data sovereignty during data sharing. The problem is how to enable sharing technologically while preserving sovereignty and keeping the use of data safe and controlled. Article [74] poses this dilemma of balancing regulatory compliance and disruptive innovation. One of the main problems is what happens to the data once it leaves the repository or platform of its owner: how to control, monitor, or trace its use to facilitate future profitability. Until now, the concern has been to protect access to data, while its use by potential consumers was not controlled; this is therefore the greatest current challenge, especially at the technological level.

-

Data modeling is cool again. Data modeling can help generate more efficient processing techniques for the data collected. However, the current problems are (a) how to balance modeling so that data quality is not lost while unnecessary metadata that takes up extra storage is not generated, and (b) how to extract semantic metadata directly from data streams in motion.

-

New AI approaches and life-cycles. The new life-cycles associated with analytical processes developed with AI techniques pose many challenges related to the use of data, among which the following stand out: (a) heterogeneity in development environments and a lack of uniformity or standards, referring to the multiple existing techniques, libraries, frameworks, etc.; and (b) the high cost of infrastructure and the required storage scalability; for example, deep learning techniques require very large sample volumes, which leads to large repositories and high scalability requirements.

-

Ethical Artificial Intelligence (AI). Explainability, that is, understanding why models make given predictions or classifications, is a hot topic in this area. However, equally important and often overlooked are the related topics of model auditability and governance: understanding and managing access to models, data, and related assets.

-

Data literacy: a company-wide data-driven culture. Data literacy is understood as enabling employees to derive meaningful insights from data. The objective is for the people in the organization to analyze situations from a critical perspective and to participate in an analytical culture based on data. A very important point in this area, therefore, is the democratization and flow of data throughout the organization, a technological challenge that is currently extremely complex to reconcile with access and privacy policies.

-

Data catalog: the new technological trend. Gartner [24] even refers to data catalogs as "the new black in data management and analytics," and they are now recognized as a central technology for data management, necessary for handling the metadata that gives meaning to the data. Gartner has defined a data catalog as a tool that "creates and maintains an inventory of data assets through the discovery, description and organization of distributed datasets." The real challenge for this technology is keeping the information in a data catalog up to date using automated discovery and search techniques.

-

Data Governance at the edge. The architectural challenge posed by edge computing is where to store the data: at the edge or in the cloud. Associated with this architectural challenge is how to implement Data Governance, access, and auditing mechanisms that are efficient and adapted to this complex environment, made up of multiple infrastructure elements, technological components, and network levels.

References

[1] Al-Badi, A., Tarhini, A., & I. Khan, A. (2018). Exploring big data governance frameworks. Procedia Computer Science, 141, 271–277.

[2] Alhassan, I., Sammon, D., & Daly, M. (2018). Data governance activities: A comparison between scientific and practice-oriented literature. Journal of Enterprise Information Management, 31(2), 300–316.

[3] Amazon Redshift, 2021. Retrieved February 8, 2021 from https://aws.amazon.com/es/redshif

[4] Apache Atlas, 2021. Retrieved February 8, 2021 from https://atlas.apache.org/

[5] Apache Attic, 2021. Retrieved February 8, 2021 from https://attic.apache.org/

[6] Apache Eagle, 2021. Retrieved February 8, 2021 from https://eagle.apache.org/

[7] Apache Knox, 2021. Retrieved February 8, 2021 from https://knox.apache.org/

[8] Apache Metron, 2021. Retrieved February 8, 2021 from https://metron.apache.org/

[9] Apache Ranger, 2021. Retrieved February 8, 2021 from https://ranger.apache.org/

[10] Apache Sentry, 2021. Retrieved February 8, 2021 from https://sentry.apache.org/

[11] Arass, M. E., Ouazzani-Touhami, K., & Souissi, N. (2019). The system of systems paradigm to reduce the complexity of data lifecycle management. Case of the security information and event management. International Journal of System of Systems Engineering, 9(4), 331–361.

[12] Atwal, H. (2020). DataOps technology. In Practical DataOps (pp. 215–247). Springer.

[13] Atwal, H. (2020). Organizing for DataOps. In Practical DataOps (pp. 191–211). Springer.

[14] Azure Synapse Analytics, 2021. Retrieved February 8, 2021 from https://azure.microsoft.com/es-es/services/synapse-analytics/

[15] Begg, C., & Caira, T. (2011). Data governance in practice: The SME quandary reflections on the reality of data governance in the small to medium enterprise (SME) sector. In The European Conference on Information Systems Management (p. 75). Academic Conferences International Limited.

[16] Bhardwaj, A., Bhattacherjee, S., Chavan, A., Deshpande, A., Elmore, A. J., Madden, S., & Parameswaran, A. G. (2014). Data hub: Collaborative data science & dataset version management at scale. arXiv preprint arXiv:1409.0798.

[17] Borek, A., & Prill, N. (2020). Driving digital transformation through data and AI: A practical guide to delivering data science and machine learning products. Kogan Page Publishers.

[18] Brackett, M., Earley, S., & Henderson, D. (2009). The DAMA guide to the data management body of knowledge: DAMA-DMBOK guide. United States: Technics Publications.

[19] Braud, A., Fromentoux, G., Radier, B., & Le Grand, O. (2021). The road to European digital sovereignty with Gaia-X and IDSA. IEEE Network, 35(2), 4–5.

[20] Cao, Q. H., Khan, I., Farahbakhsh, R., Madhusudan, G., Lee, G. M., & Crespi, N. (2016). A trust model for data sharing in smart cities. In 2016 IEEE International Conference on Communications (ICC) (pp. 1–7). IEEE.

[21] Cloudera Navigator, 2021. Retrieved February 8, 2021 from https://www.cloudera.com/products/product-components/cloudera-navigator.html

[22] Condie, T., Conway, N., Alvaro, P., Hellerstein, J. M., Elmeleegy, K., & Sears, R. (2010). MapReduce online. In NSDI (Vol. 10, p. 20).

[23] Curry, E., & Sheth, A. (2018). Next-generation smart environments: From system of systems to data ecosystems. IEEE Intelligent Systems, 33(3), 69–76.

[24] Data catalogs are the new black in data management and analytics, 2017. Retrieved March 16, 2021 from https://www.gartner.com/en/documents/3837968/data-catalogs-are-the-new-black-in-data-management-and-a

[25] De Haes, S., Van Grembergen, W., & Debreceny, R. S. (2013). COBIT 5 and enterprise governance of information technology: Building blocks and research opportunities. Journal of Information Systems, 27(1), 307–324.

[26] Ereth, J. (2018). DataOps: Towards a definition. LWDA, 2191, 104–112.

[27] Fang, H. (2015). Managing data lakes in big data era: What's a data lake and why has it became popular in data management ecosystem. In 2015 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER) (pp. 820–824). IEEE.

[28] Faroukhi, A. Z., El Alaoui, I., Gahi, Y., & Amine, A. (2020). Big data monetization throughout big data value chain: A comprehensive review. Journal of Big Data, 7(1), 1–22.

[29] Feick, M., Kleer, N., & Kohn, M. (2018). Fundamentals of real-time data processing architectures lambda and kappa. SKILL 2018-Studierendenkonferenz Informatik.

[30] Ferguson, M. (2012). Architecting a big data platform for analytics. A Whitepaper prepared for IBM, 30.

[31] Ghemawat, S., Gobioff, H., & Leung, S.-T. (2003). The Google file system. In Proceedings of the Nineteenth ACM Symposium on Operating Systems Principles (pp. 29–43).

[32] GlusterFS, 2021. Retrieved May 19, 2021 from https://www.gluster.org/

[33] Goddard, M. (2017). The EU general data protection regulation (GDPR): European regulation that has a global impact. International Journal of Market Research, 59(6), 703–705.

[34] Google BigQuery, 2021. Retrieved February 8, 2021 from https://cloud.google.com/bigquery/

[35] Hadoop HDFS, 2021. Retrieved May 19, 2021 from https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HdfsDesign.html

[36] Hamad, M. M., et al. (2021). Big data management using Hadoop. In Journal of Physics: Conference Series (Vol. 1804, p. 012109).

[37] Hosseinzadeh, A., Eitel, A., & Jung, C. (2020). A systematic approach toward extracting technically enforceable policies from data usage control requirements. In ICISSP (pp. 397–405).

[38] How to beat the CAP theorem, 2011. Retrieved February 8, 2021 from http://nathanmarz.com/blog/how-to-beat-the-cap-theorem.html

[39] Hu, H., Ahn, G.-J., & Jorgensen, J. (2011). Detecting and resolving privacy conflicts for collaborative data sharing in online social networks. In Proceedings of the 27th Annual Computer Security Applications Conference (pp. 103–112).

[40] Hummel, P., Braun, M., Augsberg, S., & Dabrock, P. (2018). Sovereignty and data sharing. ITU Journal: ICT Discoveries, 25(8).

[41] IDS Association, 2021. Retrieved March 14, 2021 from https://internationaldataspaces.org/

[42] Informatica, 2021. Retrieved February 8, 2021 from https://www.cloudera.com/solutions/gallery/informatica-customer-360.html

[43] Inmon, W. H. (1996). The data warehouse and data mining. Communications of the ACM, 39(11), 49–51.

[44] Josey, A. (2016). TOGAF® version 9.1-A pocket guide. Van Haren.

[45] Kerberos, 2021. Retrieved February 8, 2021 from https://www.kerberos.org/

[46] Khatri, V., & Brown, C. V. (2010). Designing data governance. Communications of the ACM, 53(1), 148–152.

[47] Kreps, J. (2014). Questioning the lambda architecture. Online article, July, 205.

[48] Ladley, J. (2019). Data governance: How to design, deploy, and sustain an effective data governance program. Academic Press.

[49] Lee, S. U., Zhu, L., & Jeffery, R. (2018). Designing data governance in platform ecosystems. In Proceedings of the 51st Hawaii International Conference on System Sciences.

[50] Lin, S.-W., Miller, B., Durand, J., Joshi, R., Didier, P., Chigani, A., Torenbeek, R., Duggal, D., Martin, R., Bleakley, G., et al. (2015). Industrial internet reference architecture. Industrial Internet Consortium (IIC), Tech. Rep.

[51] Lyle, J., Monteleone, S., Faily, S., Patti, D., & Ricciato, F. (2012). Cross-platform access control for mobile web applications. In 2012 IEEE International Symposium on Policies for Distributed Systems and Networks (pp. 37–44). IEEE.

[52] Michael, K., & Miller, K. W. (2013). Big data: New opportunities and new challenges [guest editors' introduction]. Computer, 46(6), 22–24.

[53] Monteith, J. Y., McGregor, J. D., & Ingram, J. E. (2013). Hadoop and its evolving ecosystem. In 5th International Workshop on Software Ecosystems (IWSECO 2013) (Vol. 50). Citeseer.

[54] Morabito, V. (2015). Big data governance. In Big data and analytics (pp. 83–104).

[55] Munappy, A. R., Mattos, D. I., Bosch, J., Olsson, H. H., & Dakkak, A. (2020). From ad-hoc data analytics to DataOps. In Proceedings of the International Conference on Software and System Processes (pp. 165–174).

[56] OneDrive, 2021. Retrieved May 19, 2021 from https://www.microsoft.com/en-ww/microsoft-365/onedrive/online-cloud-storage

[57] Otto, B., ten Hompel, M., & Wrobel, S. (2018). Industrial data space. In Digitalisierung (pp. 113–133). Springer.

[58] ownCloud, 2021. Retrieved May 19, 2021 from https://owncloud.com/

[59] Pouchard, L. (2015). Revisiting the data lifecycle with big data curation.

[60] Pretschner, A., & Walter, T. (2008). Negotiation of usage control policies-simply the best? In 2008 Third International Conference on Availability, Reliability and Security (pp. 1135–1136). IEEE.

[61] Quinto, B. (2018). Big data governance and management (pp. 495–506).

[62] Quinto, B. (2018). Big data warehousing. In Next-generation big data (pp. 375–406). Springer.

[63] Richards, N. M., & King, J. H. (2014). Big data ethics. Wake Forest Law Review, 49, 393.

[64] Sänger, J., Richthammer, C., Hassan, S., & Pernul, G. (2014). Trust and big data: a roadmap for research. In 2014 25th International Workshop on Database and Expert Systems Applications (pp. 278–282). IEEE.

[65] Schütte, J., & Brost, G. S. (2018). LUCON: Data flow control for message-based IoT systems. In 2018 17th IEEE International Conference On Trust, Security And Privacy In Computing And Communications/12th IEEE International Conference On Big Data Science And Engineering (TrustCom/BigDataSE) (pp. 289–299). IEEE.

[66] Schweichhart, K. (2016). Reference architectural model Industrie 4.0 (RAMI 4.0). An introduction. https://www.plattform-i40.de

[67] Sinaeepourfard, A., Garcia, J., Masip-Bruin, X., & Marín-Torder, E. (2016). Towards a comprehensive data lifecycle model for big data environments. In Proceedings of the 3rd IEEE/ACM International Conference on Big Data Computing, Applications and Technologies (pp. 100–106).

[68] Snowflake, 2021. Retrieved May 19, 2021 from https://www.snowflake.com/workloads/data-sharing/

[69] Soares, S. (2012). Big data governance. Information Asset, LLC.

[70] Tallon, P. P. (2013). Corporate governance of big data: Perspectives on value, risk, and cost. Computer, 46(6), 32–38.

[71] Thomas, G. (2006). The DGI data governance framework: The Data Governance Institute. Orlando: FL.

[72] Thusoo, A. (2017). Creating a data-driven enterprise with DataOps: Insights from Facebook, Uber, LinkedIn, Twitter, and EBay. O'Reilly Media.

[73] Ubale Swapnaja, A., Modani Dattatray, G., & Apte Sulabha, S. (2014). Analysis of DAC MAC RBAC access control based models for security. International Journal of Computer Applications, 104(5), 6–13.

[74] van den Broek, T., & van Veenstra, A. F. (2018). Governance of big data collaborations: How to balance regulatory compliance and disruptive innovation. Technological Forecasting and Social Change, 129, 330–338.

[75] Wang, J., Crawl, D., Purawat, S., Nguyen, M., & Altintas, I. (2015). Big data provenance: Challenges, state of the art and opportunities. In 2015 IEEE International Conference on Big Data (Big Data) (pp. 2509–2516). IEEE.

[76] Xia, Q., Sifah, E. B., Asamoah, K. O., Gao, J., Du, X., & Guizani, M. (2017). Medshare: Trust-less medical data sharing among cloud service providers via blockchain. IEEE Access, 5, 14757–14767.

[77] Zillner, S., Curry, E., Metzger, A., Auer, S., & Seidl, R. (2017). European big data value strategic research & innovation agenda. Big Data Value Association.

[78] Zillner, S., Bisset, D., Milano, M., Curry, E., Södergård, C., Tuikka, T., et al. (2020). Strategic research, innovation and deployment agenda: AI, data and robotics partnership.

Acknowledgements

The work presented in this chapter has been partially supported by the SPRI—Basque Government through their ELKARTEK program (DAEKIN project, ref. KK-2020/00035).


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Torre-Bastida, A.I., Gil, G., Miñón, R., Díaz-de-Arcaya, J. (2022). Technological Perspective of Data Governance in Data Space Ecosystems. In: Curry, E., Scerri, S., Tuikka, T. (eds) Data Spaces. Springer, Cham. https://doi.org/10.1007/978-3-030-98636-0_4


DOI: https://doi.org/10.1007/978-3-030-98636-0_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-98635-3

Online ISBN: 978-3-030-98636-0

eBook Packages: Computer Science, Computer Science (R0)